Rust Photogrammetry P3P

Matthieu Pizenberg

Camera pose estimation given 3D points and corresponding pixel coordinates

2019

This project is a kind of sub-project of interactive visual odometry. To let a human re-align frames that have drifted, we chose to use a P3P algorithm. The idea is simple: given N points with known 3D coordinates in a reference frame, and the N corresponding 2D points (pixel coordinates) in another frame, it is possible to estimate the camera transformation between the reference frame and the second frame. These algorithms are called "Perspective-n-Point" (PnP), and 3 is the minimal number of points required for the estimation.
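PnP can be read as inverting the pinhole projection. The sketch below shows the forward direction that a P3P solver must satisfy for every correspondence: a 3D point of the reference frame is moved into the camera frame by a rotation and a translation, then projected with the camera intrinsics. It is a minimal, dependency-free illustration assuming a standard pinhole model without lens distortion; the names `Intrinsics`, `Pose` and `project` are hypothetical and are not the API of this crate.

```rust
/// Pinhole camera intrinsics: focal lengths and principal point, in pixels.
#[derive(Clone, Copy, Debug)]
struct Intrinsics {
    fx: f64,
    fy: f64,
    cx: f64,
    cy: f64,
}

/// Rigid transformation from the reference frame to the camera frame:
/// x_cam = rotation * x_ref + translation (rotation stored row-major).
#[derive(Clone, Copy, Debug)]
struct Pose {
    rotation: [[f64; 3]; 3],
    translation: [f64; 3],
}

impl Pose {
    /// Applies the rotation then the translation to a reference-frame point.
    fn transform(&self, p: [f64; 3]) -> [f64; 3] {
        let r = &self.rotation;
        let t = &self.translation;
        [
            r[0][0] * p[0] + r[0][1] * p[1] + r[0][2] * p[2] + t[0],
            r[1][0] * p[0] + r[1][1] * p[1] + r[1][2] * p[2] + t[1],
            r[2][0] * p[0] + r[2][1] * p[1] + r[2][2] * p[2] + t[2],
        ]
    }
}

/// Projects a 3D point expressed in the reference frame to pixel coordinates.
/// Returns None when the point is behind (or on) the camera plane.
fn project(k: &Intrinsics, pose: &Pose, p_ref: [f64; 3]) -> Option<[f64; 2]> {
    let [x, y, z] = pose.transform(p_ref);
    if z <= 0.0 {
        return None;
    }
    Some([k.fx * x / z + k.cx, k.fy * y / z + k.cy])
}

fn main() {
    let k = Intrinsics { fx: 500.0, fy: 500.0, cx: 320.0, cy: 240.0 };
    // Identity rotation; the translation pushes the point further in front of the camera.
    let pose = Pose {
        rotation: [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
        translation: [0.0, 0.0, 1.0],
    };
    println!("{:?}", project(&k, &pose, [0.2, -0.1, 1.0]));
}
```

A P3P solver searches for the `Pose` values for which `project` maps each of the three reference points exactly onto its clicked pixel in the second frame.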

I've extracted this Rust implementation of P3P from the interactive visual odometry code base into the Rust Photogrammetry organization since it might be useful to others.

In the interactive web application, a user can click three points on each of the two thumbnails. They are displayed in purple, blue and green, as shown in the following image. The left thumbnail is the reference frame, where points with known depth information are displayed in red. The user should click near those red points, because the code associates each click in the reference frame with the nearest known 3D point. The right thumbnail is the second frame.
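The association step for the reference frame can be sketched as follows. This is a minimal illustration, not the project's code: we assume "nearest" means the smallest pixel distance to the click, and that the known depth is measured along the optical (z) axis of a pinhole camera. The type `DepthPoint` and the functions `nearest_known` and `back_project` are hypothetical names.

```rust
/// A reference-frame pixel whose depth is known (hypothetical type; these
/// correspond to the points drawn in red on the left thumbnail).
#[derive(Clone, Copy, Debug, PartialEq)]
struct DepthPoint {
    u: f64,
    v: f64,
    depth: f64,
}

/// Returns the known-depth point closest to the click, by squared pixel distance.
/// Returns None if there are no known points.
fn nearest_known(points: &[DepthPoint], click: (f64, f64)) -> Option<DepthPoint> {
    let dist2 = |p: &DepthPoint| (p.u - click.0).powi(2) + (p.v - click.1).powi(2);
    points
        .iter()
        .copied()
        .min_by(|a, b| dist2(a).total_cmp(&dist2(b)))
}

/// Back-projects a pixel with depth into a 3D point in the reference camera frame,
/// assuming a pinhole camera and a depth measured along the z axis.
fn back_project(p: &DepthPoint, fx: f64, fy: f64, cx: f64, cy: f64) -> [f64; 3] {
    [(p.u - cx) / fx * p.depth, (p.v - cy) / fy * p.depth, p.depth]
}

fn main() {
    let known = [
        DepthPoint { u: 100.0, v: 100.0, depth: 2.0 },
        DepthPoint { u: 300.0, v: 200.0, depth: 4.0 },
    ];
    // A click a few pixels away from the first red point.
    if let Some(p) = nearest_known(&known, (103.0, 97.0)) {
        let xyz = back_project(&p, 500.0, 500.0, 320.0, 240.0);
        println!("nearest: {:?}, 3D: {:?}", p, xyz);
    }
}
```

Snapping to an existing point, rather than trusting the raw click, guarantees that each of the three reference correspondences has a depth and therefore a full 3D position.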

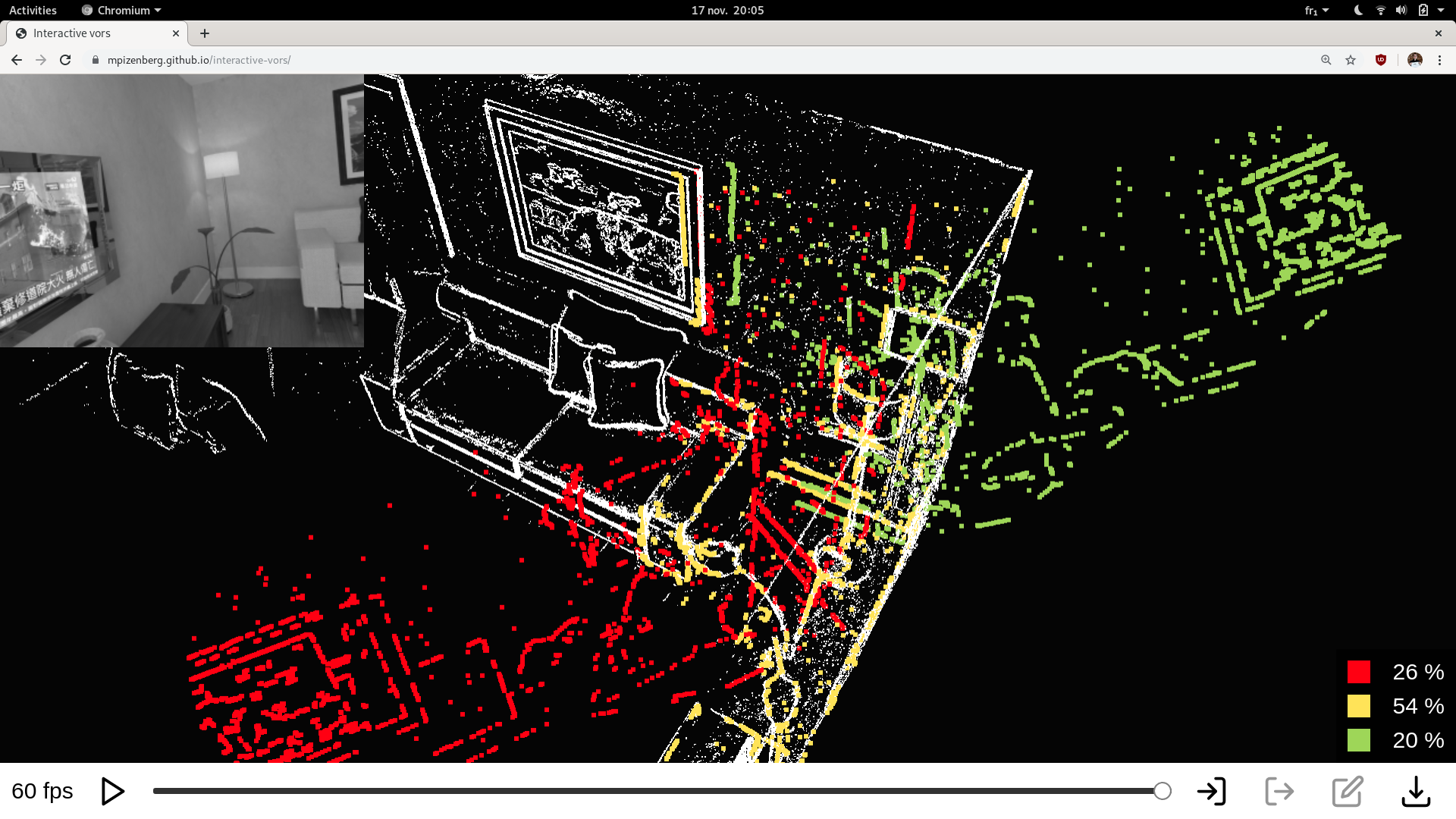

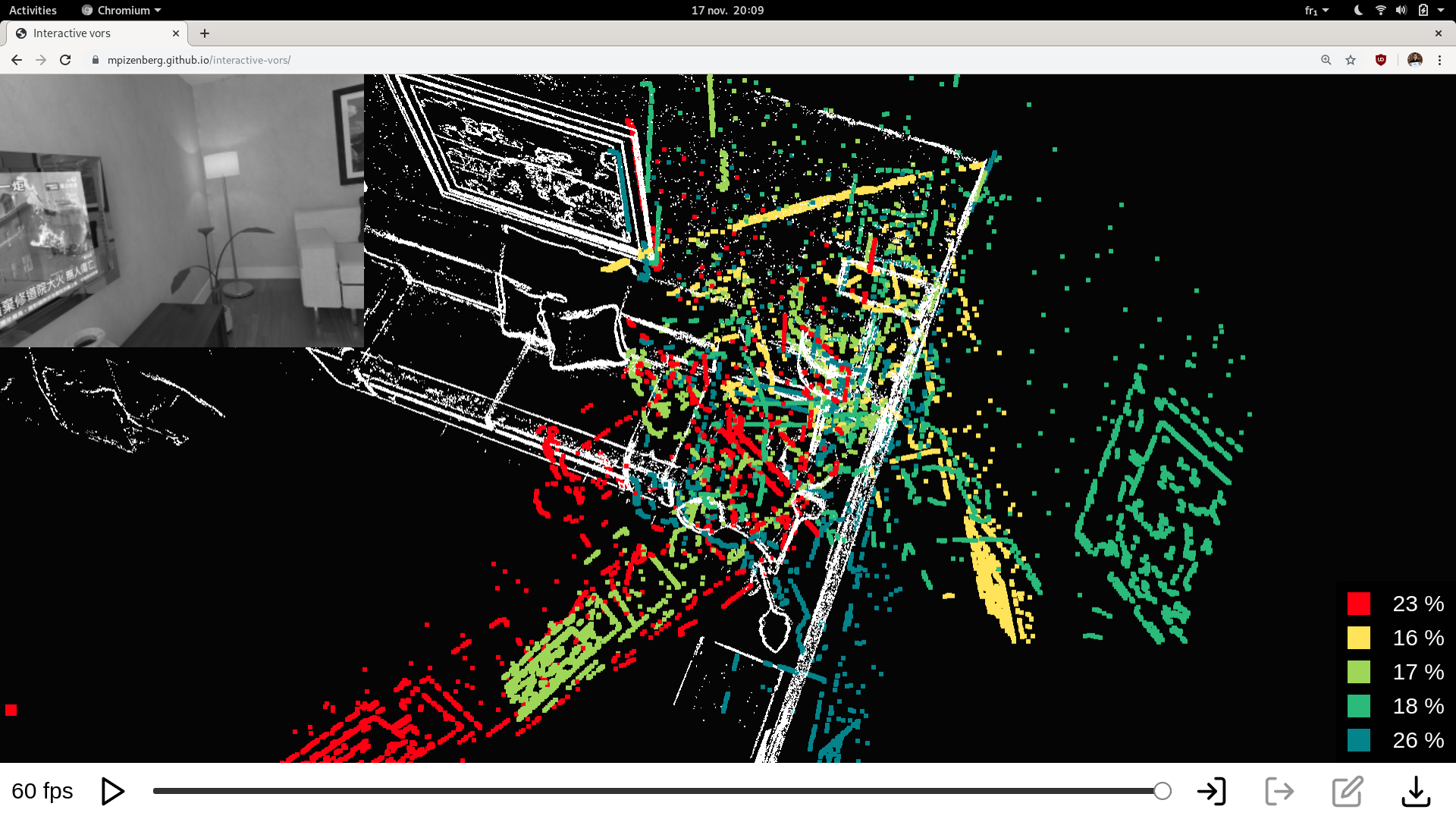

Once the third (green) point is associated in both images, we compute the possible camera transformations with P3P. There can be 0 to 4 solutions depending on the associated points. To pick the correct one for re-alignment, we display a projected point cloud in a different color for each potential solution, while the red point cloud is the projection with the initial, drifted camera pose. Below are two examples, one with two potential solutions, the other with four.
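The overlay logic can be sketched as projecting the same point cloud once per candidate pose and keeping only the points that land in front of the camera and inside the image. This is a hedged, dependency-free sketch: `Pose`, `Camera`, `project_cloud`, `overlays` and the color palette are hypothetical, and we assume a single focal length for simplicity. Since P3P yields at most four poses, four colors are enough, with red kept for the drifted pose.

```rust
type Mat3 = [[f64; 3]; 3];
type Vec3 = [f64; 3];

/// A candidate camera pose (hypothetical type): x_cam = r * x_ref + t.
#[derive(Clone, Copy, Debug)]
struct Pose {
    r: Mat3,
    t: Vec3,
}

/// Pinhole camera with a single focal length and an image size, in pixels.
#[derive(Clone, Copy, Debug)]
struct Camera {
    f: f64,
    cx: f64,
    cy: f64,
    width: f64,
    height: f64,
}

/// Projects the cloud with one pose, keeping only points in front of the
/// camera whose projection falls inside the image.
fn project_cloud(cloud: &[Vec3], pose: &Pose, cam: &Camera) -> Vec<(f64, f64)> {
    cloud
        .iter()
        .filter_map(|p| {
            // Point expressed in the candidate camera frame.
            let q: Vec3 = std::array::from_fn(|i| {
                pose.r[i][0] * p[0] + pose.r[i][1] * p[1] + pose.r[i][2] * p[2] + pose.t[i]
            });
            if q[2] <= 0.0 {
                return None;
            }
            let (u, v) = (cam.f * q[0] / q[2] + cam.cx, cam.f * q[1] / q[2] + cam.cy);
            let inside = (0.0..cam.width).contains(&u) && (0.0..cam.height).contains(&v);
            inside.then_some((u, v))
        })
        .collect()
}

/// Hypothetical palette: one color per P3P candidate (at most four).
const COLORS: [&str; 4] = ["cyan", "yellow", "orange", "magenta"];

/// Pairs each candidate pose with a color and its projected point cloud.
fn overlays(cloud: &[Vec3], candidates: &[Pose], cam: &Camera) -> Vec<(&'static str, Vec<(f64, f64)>)> {
    candidates
        .iter()
        .zip(COLORS)
        .map(|(pose, color)| (color, project_cloud(cloud, pose, cam)))
        .collect()
}

fn main() {
    let cam = Camera { f: 500.0, cx: 320.0, cy: 240.0, width: 640.0, height: 480.0 };
    let cloud = [[0.0, 0.0, 1.0], [0.1, 0.0, 1.0], [0.0, 0.1, 3.0]];
    let candidates = [
        Pose { r: [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], t: [0.0, 0.0, 1.0] },
        // Rotated 180 degrees about y: the whole cloud ends up behind this camera.
        Pose { r: [[-1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, -1.0]], t: [0.0, 0.0, 1.0] },
    ];
    for (color, pixels) in overlays(&cloud, &candidates, &cam) {
        println!("{color}: {} visible points", pixels.len());
    }
}
```

Leaving the final choice to the user keeps the interface simple: the candidate whose cloud best lines up with the second frame is visually obvious, and no extra correspondence is needed to disambiguate.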

The implementation is inspired by the OpenMVG implementation of "Lambda Twist: An Accurate Fast Robust Perspective Three Point (P3P) Solver" by M. Persson and K. Nordberg, ECCV 2018 (a reference implementation is available in the author's GitHub repository).
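For readers new to P3P, the geometric constraints that Lambda Twist, like most P3P solvers, starts from can be written as follows (our own notation, not the paper's). Each clicked pixel $(u_i, v_i)$ in the second frame gives a unit bearing vector $\mathbf{y}_i$ through the intrinsics matrix $K$; each reference point $\mathbf{x}_i$ must lie at an unknown positive distance $\lambda_i$ along that bearing; and the rigid transformation $(R, \mathbf{t})$ preserves pairwise distances:

```latex
\mathbf{y}_i = \frac{K^{-1} (u_i, v_i, 1)^\top}{\lVert K^{-1} (u_i, v_i, 1)^\top \rVert},
\qquad
\lambda_i \mathbf{y}_i = R\,\mathbf{x}_i + \mathbf{t},
\qquad i \in \{1, 2, 3\}

\lVert \lambda_i \mathbf{y}_i - \lambda_j \mathbf{y}_j \rVert^2
  = \lambda_i^2 + \lambda_j^2 - 2 \lambda_i \lambda_j \, \mathbf{y}_i^\top \mathbf{y}_j
  = \lVert \mathbf{x}_i - \mathbf{x}_j \rVert^2,
\qquad (i, j) \in \{(1,2), (1,3), (2,3)\}
```

These are three quadratic equations in the three unknown distances. Their positive real solutions, at most four, give the three points in the camera frame, from which $R$ and $\mathbf{t}$ follow by aligning the two point triplets; this is where the 0 to 4 candidate poses come from. How the system is solved quickly and accurately is the actual contribution of the Lambda Twist paper.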